A question I get a lot from bloggers is “How do I know that Google sees my site?” And the short answer is: as long as you have the privacy settings set correctly (in your Blogger Dashboard under Settings >> Basic), Google can find you.

The long answer, however, is a little more complicated.

Google really likes pages that are highly trafficked with quality incoming links. Typically a post that is promoted to your readers and is shared on social media will gain enough traction that search engines will have no trouble finding it at all. As long as you have followed all the SEO optimization guidelines, there is really not much more you need to do.

But what about those older posts that you never got around to optimizing until after your initial sitemap submission? Or what if you do a major blog overhaul and your URLs have changed? Or if you have added huge amounts of new content all at once? Or you have rebranded and want your new site to be indexed? Or you have added a lot of PAGES (not posts) that aren’t included in your navigation menus? Or if you submitted your map more than a year ago and the sitemap requirements have changed since then?

Those are all scenarios where submitting an additional sitemap to Google may be beneficial, and in any of them I would definitely submit a revised sitemap. And while I don’t necessarily discourage submitting sitemaps on a regular basis for no real reason (Google says outright you will never be PENALIZED for submitting multiple sitemaps), I don’t really encourage it either.

For the VAST MAJORITY OF BLOGGERS, REPEATEDLY GENERATING AND SUBMITTING A SITEMAP WILL HAVE LITTLE TO NO IMPACT ON YOUR SEARCH RANKING. I DO advise bloggers to submit an initial sitemap once they have optimized all their content, just to give crawlers a little push to index your site, but after the initial submission it is definitely a diminishing return to submit another.

So how do you submit a sitemap? Well.. let's start with the basics:

What is a sitemap?

There is actually more than one type of sitemap. When most people talk about sitemaps that have to do with SEO, they are talking about XML sitemaps. This is the type of sitemap I’ll be explaining, but I would be remiss if I didn’t also mention the other type of sitemap.

HTML Sitemaps: A Visual Index

Another type of sitemap is the HTML sitemap. This is like an index of all your blog content. It LITERALLY takes your post list and creates an HTML list of posts which you can modify to become a menu type list. You often see these in the bottom bar of large sites. (Here is a screenshot of the bottom of the Home Depot home page as an example.)

Some blog designers will generate and install them for blogspot clients. And often bloggers are confused, since they now think their site has a “sitemap” and is SEO friendly. That is not how HTML sitemaps work. They are really for nothing more than reader navigation and have little to do with SEO. I personally think they are a giant waste of time.

Blogger is designed with such a straightforward URL and category structure that nobody should need a sitemap to find your content. (If they do, I think you should consider a blog redesign.) The difference between a blogspot blog and, say, The Home Depot website is that the URL structure is completely different. Blogger blogs are set up chronologically, and an HTML sitemap is just a glorified version of your archives widget.

On the other hand, the Home Depot website has a web-type structure, where products are divided into smaller and smaller subcategories. Their sitemap is very different, and probably more useful to site users (and their sitemap is highly stylized):

One of the biggest drawbacks to HTML sitemaps is that they do NOT automatically update. Every time you add a new post you would need to generate a new sitemap and replace your old one. Which brings me back to the point that using your archives widget (along with category/label driven menus) is just as effective, if not more so, for navigating to old content.

XML and RSS/Atom Sitemaps: Search Engine Roadmaps

XML and RSS/Atom sitemaps are a different thing entirely. XML (Extensible Markup Language) files are both human and machine readable. You can generate a sitemap and submit it to search engines, and they will use it as a “roadmap” for crawlers to index content on your site. (Google actually doesn’t guarantee it will use your sitemap, but it can only benefit you to have one just in case!) You can also have Google crawl an up-to-date map based on your RSS feed. Google suggests that you use BOTH!

Blogger is unusual in that generating sitemaps is extremely simple to do. In 2016 Blogger began automatically generating XML sitemaps like this:

http://www.YOUR-SITE-NAME.com/sitemap.xml

and for your non-post pages, make sure the map is:

http://www.YOUR-SITE-NAME.com/sitemap-pages.xml
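If you are curious what a crawler actually reads from one of these files, here is a minimal Python sketch. It assumes the file follows the standard sitemaps.org format, and the sample XML and post URLs are made up for illustration. A real Blogger sitemap may be a sitemap index that points to sub-maps, which this sketch does not handle.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sample content; the post URLs are invented for illustration.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>http://www.YOUR-SITE-NAME.com/2016/01/first-post.html</loc>
    <lastmod>2016-01-15T10:00:00Z</lastmod>
  </url>
  <url>
    <loc>http://www.YOUR-SITE-NAME.com/2016/02/second-post.html</loc>
    <lastmod>2016-02-20T10:00:00Z</lastmod>
  </url>
</urlset>
"""


def list_sitemap_urls(xml_text):
    """Return every <loc> URL listed in a urlset-style XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


if __name__ == "__main__":
    for url in list_sitemap_urls(SAMPLE_SITEMAP):
        print(url)
```

Each entry is just a page address (plus an optional last-modified date), which is why this kind of map is useful to crawlers and not to readers.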

Tweaking the RSS sitemap is very straightforward. There is actually a standard code you can add to generate an RSS sitemap from your URL, but I personally love this Blogger Sitemap Generator, which will do it automatically and will adjust for either a custom URL or the standard blogspot one. You just need to type your full URL plus the number of posts you have on your site (you can find that number on the “Posts” tab of your Blogger dashboard).

IN 2016 THE SINGLE SITEMAP PAGE LIMIT FROM AN RSS FEED WENT FROM 500 TO 150 SO MAKE SURE YOUR URLS ARE ENDING IN "150" OR ELSE YOU ARE SUBMITTING MAPS THAT WILL NOT BE COMPLETE!

To generate your sitemap you go to the generator and insert your url and it will spit out your sitemap.

SERIOUSLY. It is that easy! Here is the example for this blog. The sitemap is the single URL listed under “Blogger Atom Feed Sitemap:”

If your blog is larger and has more posts, you will have multiple maps, since the limit is 150 URLs per map:
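To see how the 150-per-map limit turns into several feed maps, here is a rough Python sketch. The query string (`redirect=false`, `start-index`, `max-results`) is my assumption based on the commonly used Blogger feed URL pattern and is not spelled out in this post, so compare the output against what the generator actually gives you. The function name is made up for illustration.

```python
import math

PAGE_SIZE = 150  # per-map limit quoted in this post


def feed_sitemap_urls(blog_url, post_count, page_size=PAGE_SIZE):
    """Build one Atom feed sitemap URL per block of `page_size` posts.

    The query parameters are an ASSUMPTION about the usual Blogger
    feed URL pattern, not something confirmed by this post.
    """
    base = blog_url.rstrip("/")
    map_count = math.ceil(post_count / page_size)
    urls = []
    for i in range(map_count):
        start = i * page_size + 1  # feed indices start at 1
        urls.append(
            f"{base}/atom.xml?redirect=false"
            f"&start-index={start}&max-results={page_size}"
        )
    return urls


if __name__ == "__main__":
    # A blog with 320 posts needs three maps: posts 1-150, 151-300, 301-320.
    for url in feed_sitemap_urls("http://www.YOUR-SITE-NAME.com", 320):
        print(url)
```

The point is just the arithmetic: divide your post count by 150, round up, and that is how many feed maps you should end up with.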

Submitting Your Sitemaps

Now that you have your map(s), what do you do with them? You have two options. You can add your sitemap to your robots.txt file, which tells crawlers which URLs to crawl on your site, OR you can submit them to Webmaster Tools.

I personally do NOT like the robots option, but it is much more straightforward if you don’t have a Webmaster Tools account set up. The problem is that you need to type the code in (or copy and paste it) and any mistake will mess it up. If you are careful, it shouldn’t be a problem, but it just isn’t my favorite method.

Using Robots.txt

To submit your sitemap via the robots.txt box in your dashboard (under Settings >> Search Preferences), copy the following code, replace “YOUR-BLOG-URL” with your blog URL, and replace the information between the asterisks with the atom feed sitemap code generated by the sitemap generator. Then paste it into the custom robots.txt box:

User-agent: *
Disallow: /search
Allow: /
Sitemap: http://YOUR-BLOG-URL.com/sitemap.xml
Sitemap: http://YOUR-BLOG-URL.com/sitemap-pages.xml
Sitemap: http://YOUR-BLOG-URL.com/*atom.xml-blah-blah-blah=150*
Sitemap: http://YOUR-BLOG-URL.com/*atom.xml-blah-blah-blah=150*

Then save and that is it!

The reason I don’t like this method is that you don’t get any sort of confirmation that your code is correct, and there is no real way of tracking whether your sitemap has been crawled. It also requires that you remember to manually go in and add the extra maps when you go over 150 posts. The perk of this method is that it also works for search engines other than Google.
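Since Blogger gives you no confirmation, one rough way to sanity-check your robots.txt text before pasting it in is to run it through Python’s built-in robots.txt parser. This is only a sketch: the sample text, the test paths, and the `check_robots` helper are made up for illustration, `site_maps()` needs Python 3.8 or newer, and passing this check says nothing about how any particular search engine will read the file.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt text with placeholder URLs, as in the template above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /
Sitemap: http://YOUR-BLOG-URL.com/sitemap.xml
Sitemap: http://YOUR-BLOG-URL.com/sitemap-pages.xml
"""


def check_robots(robots_text, blog_url):
    """Parse robots.txt text locally and report what a crawler would see."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    base = blog_url.rstrip("/")
    return {
        # site_maps() returns None when no Sitemap lines were found.
        "sitemaps": parser.site_maps() or [],
        # Hypothetical post and search paths, used only as test cases.
        "post_allowed": parser.can_fetch("*", base + "/2016/01/some-post.html"),
        "search_blocked": not parser.can_fetch("*", base + "/search/label/seo"),
    }


if __name__ == "__main__":
    report = check_robots(ROBOTS_TXT, "http://YOUR-BLOG-URL.com")
    for key, value in report.items():
        print(key, "->", value)
```

If the list of sitemaps comes back shorter than you expected, or a normal post shows up as blocked, you probably have a typo somewhere in the code.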

Using Webmaster Tools

When I submit my sitemaps I always use the Webmaster Tools sitemap submission. If you don’t have a Webmaster Tools account set up or haven’t added your blogs, you definitely should. (I’ll share some other great tips for using Webmaster Tools in future posts!)

To submit a sitemap, select your blog from your properties list and select Crawl >> Sitemaps. In the upper right corner is an orange button to submit a new sitemap.

A pop-up box will appear and you need to paste in the sitemap URL you generated in the sitemap generator, NOT INCLUDING “http://www.yourblogname.com/”.

For the XML sitemap you want to ONLY paste in "sitemap.xml" and for the RSS feed maps you only want to paste in “atom.xml blah blah blah” and hit SUBMIT.

If you have more than one map you want to repeat this for the second URL.
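If you have several maps, trimming the front of each URL by hand gets tedious. Here is a small Python sketch of that trimming step. The function name is made up, and the atom feed query string in the example is an assumed pattern rather than something taken from this post, so use whatever your generator produced.

```python
from urllib.parse import urlsplit


def sitemap_path_for_form(full_url):
    """Drop the scheme and domain, keeping only what goes in the submit box."""
    parts = urlsplit(full_url)
    path = parts.path.lstrip("/")
    # Keep the query string, since feed-based maps depend on it.
    return f"{path}?{parts.query}" if parts.query else path


if __name__ == "__main__":
    examples = [
        "http://www.yourblogname.com/sitemap.xml",
        # Assumed feed URL pattern, for illustration only.
        "http://www.yourblogname.com/atom.xml?redirect=false&start-index=1&max-results=150",
    ]
    for url in examples:
        print(sitemap_path_for_form(url))
```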

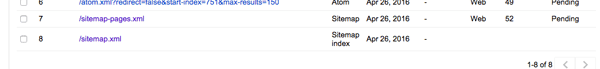

Once you have submitted them all, refresh the page to see whether each sitemap was valid. At the bottom of the page it will show you a list of sitemaps as well as how many pages have been submitted and indexed for each one. Depending on your site’s size and traffic, the map should be completely indexed within a few days, and even quicker if your site is already on Google’s radar.

For the XML sitemap the submissions will look like this:

If you click on the sitemap index it will actually show you how many posts are contained in each subsection of that map as well (and if you have done it correctly, it will look just like the RSS map).

And that is all there is to it! Google now has a map of your content, and can index it for search. Google says it can take up to 7 days to crawl a new map, but I've found it was much faster than that. For my blog (which is fairly highly trafficked) it takes less than 24 hours:

And to see how your site is being indexed overall, you can check the Google Index >> Index Status page of Webmaster Tools.

You want to see a slow and steady incline in pages being indexed (as you generate more content). Sometimes, after a sitemap submission you may see a little spike in indexing, but long term the slope should be the same. (The ‘peaks’ in your crawler status are from content being indexed and then de-indexed. That is why there is not a huge long term benefit of submitting sitemaps unless your content is SEO optimized and crawled regularly even without a map!)

Of course, this is ONLY Google’s search engine. Unlike the robots.txt method, a Webmaster Tools submission doesn’t reach other search engines, so you may also want to submit your maps to others like Bing (which shares its algorithm with Yahoo). Although we all know that Google is king!
